In what has become an ongoing problem, a blogger has come forward with reports of troublesome information suppression on Facebook. Jeremy Ryan, of the site Addicting Info, recently reported continued reprimands he’s been getting from Facebook due to people submitting false abuse reports.

Trolls are now having activists removed by filing fake Facebook complaints. That’s right: people are suppressing information in Wisconsin by actively reporting people on Facebook whom they deem to be a threat. I myself have been reported and banned for one to three days for simply posting “Good job” or “The majority of Wisconsin doesn’t like Scott Walker.” People have been reported on pages for saying nothing more than my name and have been reprimanded by Facebook. The strategy is simple, and Facebook lets it continue. If someone reports something to Facebook as abusive, Facebook doesn’t actually look at it; they just remove it and warn the person who posted it. If you get enough warnings, you cannot dispute them at all, and with no admin contacts and no one at Facebook actually looking at the posts reported as “abusive,” the person gets blocked.

David Badash, editor of The New Civil Rights Movement, reports similar experiences twice in the last month, first on October 15, 2011:

With no warning, and for no discernible reason, after posting a link to the piece on a very few “Occupy” sites (perhaps three?), I received a notice (image, right) from Facebook that claimed I was posting “spam and irrelevant content on Facebook pages.” The notice also said that my account was being disabled for fifteen days from posting any content on pages that were not mine.

In other words, Facebook had “decided” that the content I was sharing wasn’t content, that it was “spam,” or “irrelevant,” despite the very clear fact that it was neither.

Facebook offered no opportunity to challenge its decision, no option to protest, no option to appeal. Almost as bad, Facebook did not offer a credible reason as to why it deemed original news information as spam and irrelevant. And quite frankly, censoring a report of questionable police actions is a chilling notion.

And then again on November 6, 2011:

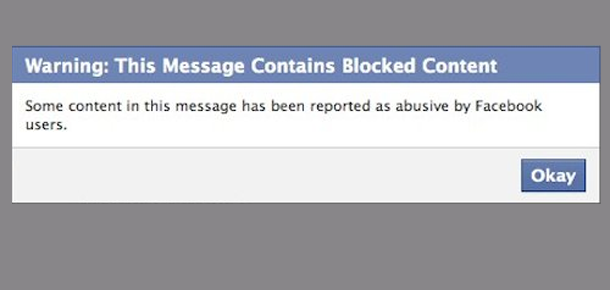

After editing our “Week In Review” segment, I attempted to share it on Facebook on my personal page. I received an even more draconian censorship notice: “Warning: This message contains blocked content. Some content in this message has been reported as abusive by Facebook users.” My first thought was, “that’s odd,” as this is an original post and was just published a few seconds prior. My second thought was, OK, that’s their problem, and I’ll take the heat if a Facebook user wants to report my site’s content as “abusive.” So I tried to post it again.

(Full disclosure: David Badash is a Facebook/Twitter friend of mine.)

Earlier this year, Facebook (whether by administrator or by algorithm) removed a picture of two men kissing because, according to its notice, “Shares that contain nudity, or any kind of graphic or sexually suggestive content, are not permitted on Facebook,” though the removed picture contained none of these things. After a substantial amount of publicity, a Facebook spokesperson quietly told one blogger that the removal was in error. And you know what? I believe Facebook. I don’t think Mark Zuckerberg is interested in suppressing information (Lamebook notwithstanding), but the company’s policies and procedures are far from adequate to guard against the suppression of information. Returning to Jeremy Ryan for a moment:

Suppression of information is a new low. Anyone with money can now buy the suppression of the message delivered by anyone they choose. We cannot stand for this, and we must call on Facebook to change these policies and allow an appeal process, or have someone look at a post before banning anyone. Otherwise we will create a situation in which social media, too, goes to the highest bidder.

Ryan is right. Facebook currently boasts over 800 million active users worldwide. The company’s engineers need to understand the responsibility that comes with such influence and act accordingly. At the very least, an appeals process should be put in place to keep good information flowing while actual spam continues to be minimized.

I can see why Addicting Info would be upset since 65% of their traffic comes from Facebook. Wait, does that mean they are spamming Facebook?

http://www.alexa.com/siteinfo/addictinginfo.org#

No, it just means that a lot of their traffic comes from Facebook. It’s not unusual for an activist group to receive lots of traffic that way. On my personal blog, Facebook is a pretty significant source of links.

Thanks for taking the time to comment!

Maybe it has something to do with all of the successful DMCA complaints filed against Addicting Info for plagiarism. All of their content can be found on other sites first (read TPM, Raw Story, Think Progress, AlterNet, PoliticusUSA, Huffington Post, and even most hilariously, the AP).

Or maybe it’s because they are spamming FB again. They have tons of alleged “groups” devoted to just their links.

For example:

http://www.facebook.com/Republicansareidiots1

http://www.facebook.com/republicansareidiots

http://www.facebook.com/beingliberal.org

http://www.facebook.com/UnitedAgainstRepublicans

http://www.facebook.com/LiberalAndProudOfIt

A Google search (admittedly a quick one) for DMCA notices related to Addicting Info turns up no information. I would love to see links if you have them. If you’re talking about articles that link to, quote, and comment on articles from other websites, that’s SOP. If anything, it’s encouraged.

And a site with multiple authors will likely have multiple outlets on Facebook sharing content. The audiences for the different pages won’t necessarily be (and in the five cases you offer, aren’t) identical. Sharing links to the different groups wouldn’t be considered spam, especially since fans opted in to receiving links by becoming fans of those pages.

I appreciate your input!

Thanks for this article. Eventually something will come along to replace Facebook, and people will leap for it because Facebook does not care. For example, somehow http://pintclub.org, a Central Florida blood drive initiative, was blacklisted by Facebook. Each month the volunteer organizer of the drive creates an event asking friends and anyone who cares to help get the site off the blacklist by using Facebook’s mistake-reporting process, found here: http://goo.gl/W7jJtr

They’ve ignored their mistake for more than a year and are literally undermining a community initiative to save lives, most likely because they’ve gotten too big and have bad code.

https://www.facebook.com/events/451956128251074/452886091491411

Yes, I have also come under attack from antisemites and misogynists (sometimes they’re the same person!). It’s disgusting. The things I say back to them are never as bad as the things they say to me, things that Facebook REFUSES to get rid of! I am seriously considering just dumping Facebook altogether.

It’s ridiculous: many of my chats and messages have been removed as abusive when they were not, and neither my friends nor I reported anything to Facebook.